Prompt Engineering Cookbook: The One-Two CoT Combo

Chain of Thought and Self-Consistency Prompting

Chain of Thought (CoT) Prompting

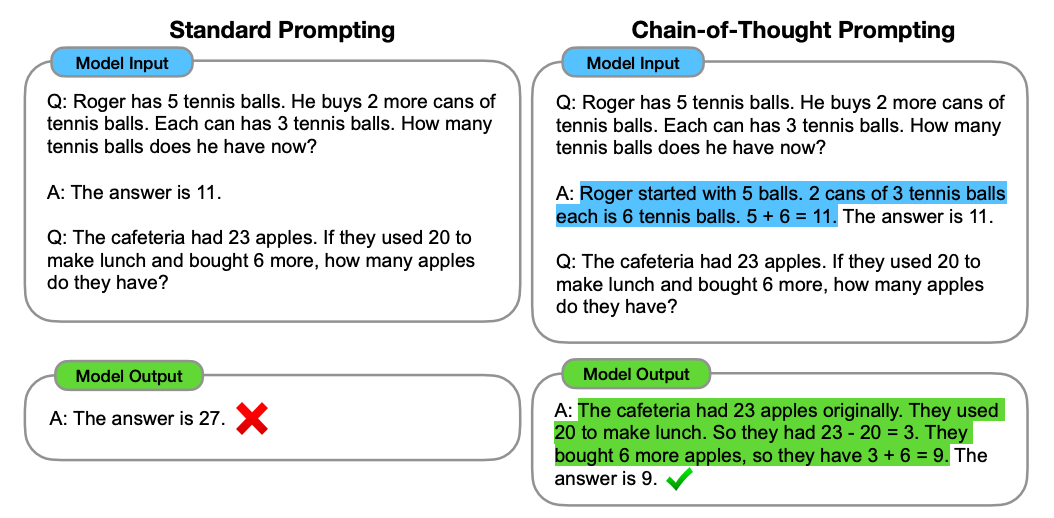

Chain of Thought (CoT) prompting is a technique that improves the reasoning of Large Language Models (LLMs) by guiding them through a step-by-step process. Instead of asking for a direct answer, CoT prompts the model to lay out its reasoning, much like working through a math problem one step at a time. This can be done with a phrase such as "Let's think step by step" or with examples that show a series of logical steps leading to an answer, and it significantly improves accuracy on logic-based tasks. A key advantage of CoT is transparency: the visible reasoning makes it easier for users to understand and verify the AI's conclusions.

When to Apply Chain of Thought (CoT) Prompting

Understanding Complex Queries: CoT prompting is invaluable when dealing with complex questions or problems where the answer requires multi-step reasoning.

Enhancing Problem-Solving Accuracy: For tasks involving logic, mathematics, or any scenario that requires a sequence of reasoning steps, CoT guides the AI through a logical sequence and can significantly improve problem-solving accuracy.

Clarifying the AI's Reasoning Process: When understanding how the AI reached a conclusion matters as much as the answer itself, CoT makes the AI's decision-making process transparent, which makes it easier to evaluate the soundness of its reasoning.

Educational and Training Applications: In teaching or learning scenarios where illustrating the thought process behind an answer is beneficial, CoT helps break complex concepts down into simpler, understandable steps.

Error Checking and Quality Assurance: When reviewing or debugging AI-generated content, CoT prompting can help trace the origin of errors or inaccuracies in the AI's output, providing a clear pathway to understanding and rectifying mistakes.

Innovative Problem Approaches: CoT prompting can help uncover novel solutions in creative or innovative fields where unique problem-solving approaches are valued. It encourages the AI to explore different angles and perspectives, which can lead to unexpected and insightful answers.

Performance Benefits of CoT Prompting

To highlight the benefits of CoT prompting, I have outlined various results from a study called “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” conducted by Jason Wei et al. from the Google Brain Team.

Enhanced Problem-Solving in Complex Scenarios: CoT prompting yields larger gains on more complex problems. For instance, on GSM8K, a dataset of challenging grade-school math word problems, CoT more than doubled the performance of the largest models tested (GPT-3 175B and PaLM 540B).

Robustness across Different Scenarios: CoT prompting remained effective across a range of conditions. It outperformed standard prompting regardless of which annotator wrote the reasoning chains or which exemplars were used, indicating its versatility.

Improved Commonsense Reasoning: When applied to commonsense reasoning tasks such as StrategyQA and Sports Understanding, CoT prompting led to significant improvements. For example, PaLM 540B with CoT outperformed the prior state of the art on StrategyQA and exceeded the accuracy of an unaided sports enthusiast on Sports Understanding.

Facilitation of Length Generalization: In symbolic reasoning tasks such as last letter concatenation and coin flip problems, CoT prompting allowed the model to generalize to inputs longer than those seen in the few-shot exemplars, showing its adaptability to unseen scenarios.

Example Prompt
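A minimal sketch of how a few-shot CoT prompt can be assembled in Python. The exemplar is the tennis-ball problem and the test question is the cafeteria problem, both from the Wei et al. paper; the helper name `build_cot_prompt` and the plain "Q:/A:" layout are illustrative assumptions rather than a required format. The key idea is that each exemplar shows its reasoning and ends with "The answer is N.", and the new question is left with an open answer slot for the model to continue in the same style.

```python
# Few-shot chain-of-thought prompt: each exemplar pairs a question with a
# worked rationale that ends in "The answer is N."
EXEMPLARS = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis "
        "balls. 5 + 6 = 11. The answer is 11.",
    ),
]


def build_cot_prompt(question: str, exemplars=EXEMPLARS) -> str:
    """Concatenate the worked exemplars, then the new question with an open 'A:' slot."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in exemplars]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    print(build_cot_prompt(
        "The cafeteria had 23 apples. If they used 20 to make lunch and "
        "bought 6 more, how many apples do they have?"
    ))
```

Adding more exemplars is just a matter of appending (question, rationale) pairs to `EXEMPLARS`; the model tends to imitate whatever reasoning style and answer format the exemplars use.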

Notable Extensions of Chain of Thought (CoT) Prompting

A paper by Chen et al. describes how CoT prompting has evolved into several variants that extend its effectiveness and adaptability. Two notable extensions are "Zero-Shot Chain of Thought" and "Golden Chain of Thought."

1. Zero-Shot Chain of Thought (Zero-shot-CoT)

Description:

Zero-shot-CoT is a variant of CoT that lets the model reason through a task without any worked examples of that task in the prompt.

This approach is facilitated by the phrase “Let’s think step by step,” which triggers the model to generate a sequential reasoning chain.

Performance:

Zero-shot CoT enhances the model's logical coherence and comprehensiveness, leading to more accurate responses.

Example:

Leveraging Zero-shot CoT Prompting for Complex Problems: A Personal Methodology

Zero-shot Chain of Thought (Zero-shot CoT) prompting has become a cornerstone of my toolkit, especially for tackling problems with multiple components. Its strength is breaking a complex issue into manageable steps, which sets the stage for a more focused and effective resolution strategy.

The Process:

Problem Decomposition: Initially, I present the Large Language Model (LLM) with a multifaceted problem, requesting a step-by-step breakdown. This initial decomposition serves as a scaffold, revealing the problem's core components through the AI's lens.

Refinement and Expansion: With the LLM's output as a foundation, I meticulously review each identified step. Here, I fill in logical gaps and introduce additional steps where necessary. A staple in my refinement process is the directive to "reanalyze the subject matter to ensure nothing was overlooked." This iterative step is crucial for a comprehensive understanding and solution approach.

Solution Synthesis: Armed with a refined list of steps, I then employ a CoT prompt, directing the LLM to tackle the problem anew, but this time, guided by the enhanced framework I've developed. This not only streamlines the solution process but also tailors the AI's approach to the unique contours of the problem at hand.
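The three stages above can be sketched as three prompt templates chained together. This is an illustration under stated assumptions, not the author's exact prompts: `ask_llm` is a hypothetical stand-in for whatever LLM client you use, the template wording is invented, and only the "reanalyze the subject matter to ensure nothing was overlooked" directive is quoted from the text. The human review happens between stages 1 and 2, where you supply the missing steps yourself.

```python
from typing import Callable, List

# Hypothetical: any function that sends a prompt to an LLM and returns its reply.
AskLLM = Callable[[str], str]


def decompose(problem: str, ask_llm: AskLLM) -> str:
    """Stage 1: zero-shot CoT, used only to draft a list of steps."""
    return ask_llm(
        f"{problem}\n\nBreak this problem down into the steps needed to solve it. "
        "Let's think step by step."
    )


def refine(draft_steps: str, my_additions: List[str], ask_llm: AskLLM) -> str:
    """Stage 2: add the steps a human reviewer found missing, then ask for a re-check."""
    extra = "\n".join(f"- {step}" for step in my_additions)
    return ask_llm(
        f"Proposed steps:\n{draft_steps}\n{extra}\n\n"
        "Reanalyze the subject matter to ensure nothing was overlooked, "
        "then return the final ordered list of steps."
    )


def solve(problem: str, steps: str, ask_llm: AskLLM) -> str:
    """Stage 3: a CoT prompt that walks the model through the refined steps."""
    return ask_llm(
        f"Problem: {problem}\n\n"
        f"Work through these steps in order, showing your reasoning for each:\n{steps}"
    )


if __name__ == "__main__":
    # Offline stub so the sketch runs without an API; replace with a real client.
    def fake_llm(prompt: str) -> str:
        return f"[reply to a {len(prompt)}-character prompt]"

    problem = "Plan a database migration with zero downtime."
    draft = decompose(problem, fake_llm)
    steps = refine(draft, ["Define a rollback plan"], fake_llm)
    print(solve(problem, steps, fake_llm))
```

Keeping each stage as a separate function makes it easy to stop after `decompose`, inspect the draft, and decide which steps to pass to `refine`, which is where the domain knowledge stressed below comes in.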

A Key Insight:

Through this approach, I've discovered the immense value of Zero-shot CoT in streamlining the creation of CoT prompts. It's like building a map before embarking on a journey, ensuring each step towards the solution is clear and deliberate.

A Word of Caution:

However, it's imperative to approach this method with a solid grasp of the problem domain. This understanding is critical for identifying any inaccuracies or gaps in the AI's logic, allowing for the creation of additional steps or logic checks as needed. Without this oversight, there's a risk of perpetuating overlooked errors or incomplete reasoning in the AI-generated solution.

Closing Thoughts:

This personalized methodology has enhanced my problem-solving efficiency and deepened my understanding of the iterative nature of logical reasoning with AI. It underscores the importance of active engagement and critical analysis in harnessing the full potential of Zero-shot CoT prompting.

2. Golden Chain of Thought

Description:

Golden CoT incorporates "ground-truth chain-of-thought" solutions within the prompt, significantly simplifying tasks, especially in abductive reasoning.
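To show what "ground-truth chain-of-thought in the prompt" means in practice, here is a small sketch that embeds a known-correct reasoning chain ahead of the question. The prompt layout, the helper name `build_golden_cot_prompt`, and the mini-mystery are all invented for illustration; they are not taken from the paper or its benchmark.

```python
from typing import List


def build_golden_cot_prompt(puzzle: str, golden_chain: List[str], question: str) -> str:
    """Embed a ground-truth reasoning chain in the prompt (Golden CoT).

    The model is handed the correct reasoning steps and only has to
    follow them to a conclusion, instead of producing the chain itself.
    """
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(golden_chain, start=1))
    return (
        f"Puzzle:\n{puzzle}\n\n"
        f"Reasoning:\n{steps}\n\n"
        f"Question: {question}\n"
        "Using the reasoning above, state the answer."
    )


# Invented mini-mystery, for illustration only.
print(build_golden_cot_prompt(
    puzzle="The vase broke at 9 pm. Alice was at the cinema until 10 pm. "
           "Bob was home alone all evening.",
    golden_chain=[
        "The vase broke at 9 pm, so whoever broke it was home at 9 pm.",
        "Alice was at the cinema until 10 pm, so she was not home at 9 pm.",
        "Bob was home all evening, so he was the only one present.",
    ],
    question="Who broke the vase?",
))
```

The sketch also makes the limitation below visible: someone has to write `golden_chain` before the model sees the puzzle, so the model is following reasoning rather than discovering it.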

Performance:

In detective puzzles, GPT-4 using Golden CoT demonstrated an 83% solve rate compared to the 38% solve rate with standard CoT.

Limitations:

The Golden CoT relies on pre-supplied reasoning steps, indicating limited capability in solving divergent problems independently.

Paper: Unleashing the potential of prompt engineering in Large Language Models: a comprehensive review

Zero-Shot CoT Implementation Guide

Understand the Concept:

Zero-shot CoT involves prompting a language model to perform a task without including any worked examples of that task in the prompt.

It's like asking the AI to solve a problem based purely on its pre-existing knowledge and reasoning capabilities.

Formulate Your Question Clearly:

Start by defining the problem or question you want the AI to solve.

Ensure that your question is specific and clear. Ambiguous or vague questions can lead to less accurate responses.

Use a "Let's Think Step by Step" Prompt:

Before your main question, add the phrase “Let’s think step by step.”

This phrase encourages the AI to break down the problem into smaller, logical steps, leading to a more coherent and detailed answer.

Provide Relevant Context:

Although this is a zero-shot approach, giving some context can help guide the AI.

Include any relevant background information or parameters that are crucial to understanding the question.

Ask Your Question:

Follow the context and “Let’s think step by step” prompt with your actual question.

For example: “Let’s think step by step. If a restaurant serves 20 meals in an hour, how many meals can it serve in a day?”
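The steps so far (optional context, the trigger phrase, then the question) can be captured in a small prompt builder. This is a sketch, and the function name is hypothetical. By default it follows this guide and puts the trigger before the question. The `trigger_first=False` option uses the layout from the original Zero-shot-CoT paper by Kojima et al., where the question comes first and "Let's think step by step." opens the answer. The 12-hour context line in the usage example is invented to show how the "Provide Relevant Context" step removes ambiguity from the restaurant question.

```python
TRIGGER = "Let's think step by step."


def build_zero_shot_cot_prompt(question: str, context: str = "", trigger_first: bool = True) -> str:
    """Build a zero-shot CoT prompt: optional context, then trigger and question.

    No worked examples are included, which is what makes it zero-shot.
    trigger_first=True puts the trigger before the question;
    trigger_first=False uses the "Q: ... A: Let's think step by step." layout.
    """
    if trigger_first:
        body = f"{TRIGGER} {question}"
    else:
        body = f"Q: {question}\nA: {TRIGGER}"
    context = context.strip()
    # Context, when given, goes first on its own paragraph.
    return f"{context}\n\n{body}" if context else body


print(build_zero_shot_cot_prompt(
    "If a restaurant serves 20 meals in an hour, how many meals can it serve in a day?",
    context="The restaurant is open 12 hours a day.",
))
```

Both placements are easy to try side by side, which fits the "Refine as Needed" advice later in this guide.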

Interpret the AI's Response:

The AI will respond with a series of logical steps leading to the final answer.

Review these steps to understand how the AI arrived at its conclusion and to spot any weak links in its reasoning.

Evaluate the Answer:

Assess the AI's answer for logical consistency and accuracy.

Remember that while Zero-Shot CoT can be effective, it’s not infallible. Always use critical thinking to evaluate the AI’s response.
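Evaluating the answer is easier once the final result is separated from the reasoning. Kojima et al. do this with a second "answer extraction" prompt that appends "Therefore, the answer (arabic numerals) is" to the prompt and the generated reasoning. The sketch below builds that second prompt and adds a fallback parser. The function names are hypothetical, and the "last number in the text is the answer" rule is a simple heuristic assumed here, not a standard.

```python
import re
from typing import Optional

ANSWER_TRIGGER = "Therefore, the answer (arabic numerals) is"


def build_extraction_prompt(cot_prompt: str, reasoning: str) -> str:
    """Second pass: feed the reasoning back and ask only for the final number."""
    return f"{cot_prompt}\n{reasoning}\n{ANSWER_TRIGGER}"


def last_number(text: str) -> Optional[str]:
    """Heuristic: treat the last number in the text as the final answer.

    Thousands separators are removed, so "1,200" becomes "1200".
    Returns None when the text contains no number.
    """
    matches = re.findall(r"-?\d[\d,]*(?:\.\d+)?", text)
    return matches[-1].replace(",", "") if matches else None


print(last_number("20 meals per hour times 12 hours = 240 meals."))
```

Comparing the extracted number with your own calculation is a quick first check; the reasoning steps still need a human read when the numbers disagree.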

Refine as Needed:

If the answer is not satisfactory, try rephrasing your question or providing additional context.

Experiment with different phrasings or angles of approach to see how the AI's responses vary.

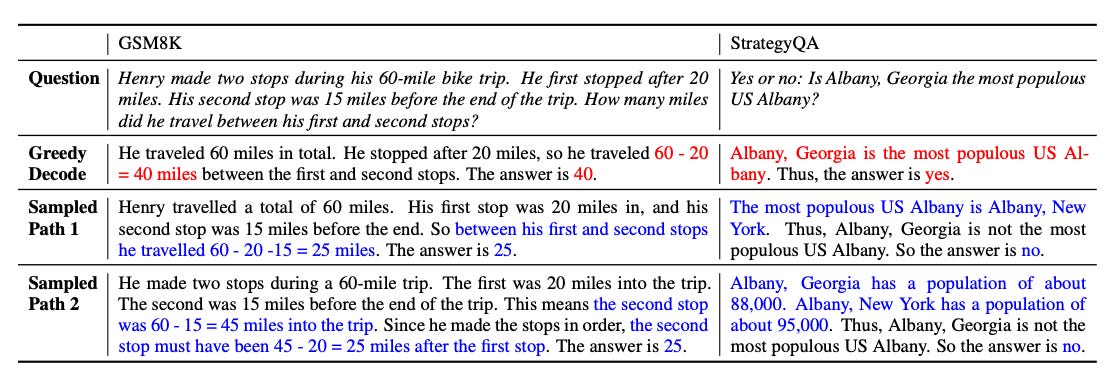

Self-Consistency Method:

The "Self-Consistency Method" in prompting is a technique designed to enhance the accuracy and reliability of responses from Large Language Models (LLMs). It is based on the observation that a complex reasoning problem usually admits several different reasoning paths that lead to the same correct answer. Instead of accepting a single chain of thought, the model is given a CoT prompt, several reasoning paths are sampled for the same question, and the answer that most paths agree on is selected. This method is particularly effective in addressing challenges like proof planning and selecting the correct proof step in complex reasoning tasks, as observed in models like InstructGPT and GPT-3.

When to Apply Self-Consistency Prompting

The Self-Consistency Method in prompt engineering should be applied in scenarios where:

Complex Reasoning Tasks: The method is particularly effective for multi-step reasoning tasks, where multiple valid ways exist to approach and solve a problem. It is well-suited for situations requiring deeper analysis and deliberation to reach a correct answer.

Arithmetic and Commonsense Reasoning: The technique has shown significant improvements in accuracy for arithmetic reasoning tasks (e.g., complex math problems) and commonsense reasoning tasks (e.g., understanding everyday scenarios and making logical deductions).

Tasks with Multiple Reasoning Paths: It's ideal for problems that admit a variety of reasoning paths leading to the correct answer. This method leverages the diversity of reasoning paths to increase confidence in the final answer.

Fixed Answer Set Problems: Self-consistency is most effective for problems where the final answer is from a fixed set of possibilities, as it involves aggregating different answers to find the most consistent one.

Situations Requiring Improved Model Calibration: The method can provide more reliable and calibrated outputs from language models, making it useful for tasks where precision and accuracy are critical.

Collecting Rationale Data: If there's a need to collect reasoning paths or rationales behind model predictions, self-consistency can be useful. It can provide multiple reasoning paths that lead to a single answer, offering insights into the model's thought process.

When Standard Prompting is Insufficient: It's beneficial when standard prompting or greedy decoding does not yield satisfactory results, especially in tasks that go beyond fact recall and require logical deduction.

Performance Benefits of Self-Consistency Prompting

Self-consistency prompting has demonstrated significant performance gains for LLMs, particularly in tasks that require in-depth reasoning. Below are the specific improvements reported in "Self-Consistency Improves Chain of Thought Reasoning in Language Models" by Wang et al. The figures are absolute accuracy gains over CoT prompting with greedy decoding.

Arithmetic Reasoning

UL2-20B: Self-consistency showed a notable improvement in accuracy over CoT prompting, with gains ranging from +3.2% to +6.8% across different tasks.

LaMDA-137B: The method was particularly effective, with gains ranging from +9.1% to +23.9%.

PaLM-540B: Notable improvements were observed, including a significant +17.9% increase in GSM8K and +12.5% in AQuA.

GPT-3 (code-davinci-001 and code-davinci-002): Self-consistency also improved performance, with gains of up to +23.2% in MultiArith and +17.9% in GSM8K.

Commonsense and Symbolic Reasoning

UL2-20B: Gains were modest, with an increase of up to +6.8% in ARC-c.

LaMDA-137B: Gains reached +5.2% in CSQA and +4.7% in ARC-c.

PaLM-540B: Improvements were consistent, including +6.3% in StrategyQA and +3.5% in ARC-c.

GPT-3 (code-davinci-001 and code-davinci-002): Gains of +8.3% in CSQA and +6.4% in StrategyQA demonstrated the effectiveness of the method.

Out-of-Distribution (OOD) Setting in Symbolic Reasoning

The method also showed significant gains in the out-of-distribution setting, where test inputs are longer than those in the prompt exemplars, indicating that it remains effective in harder scenarios.

Impact of Sampled Reasoning Paths

Accuracy improved as the number of sampled reasoning paths increased. Sampling more paths (e.g., 40) consistently led to better results, underscoring the value of diverse reasoning paths.

Comparisons with Other Methods

Self-consistency outperformed other methods like sample-and-rank, beam search, and ensemble-based approaches across various reasoning tasks.

Robustness to Imperfect Prompts

The method showed resilience to imperfect prompts, helping to offset errors in the exemplars and improve accuracy.

General Observations

Self-consistency offers a more reliable and effective way to add rationales in few-shot in-context learning for common NLP tasks.

It is compatible with different sampling strategies and scales effectively with language model size.

The method is particularly useful for language models that are not perfect reasoners, as it provides a richer set of reasoning paths and improves robustness to imperfect prompts.

Paper: Self-Consistency Improves Chain of Thought Reasoning in Language Models

Implementation Steps

Understand the Task: Begin by clearly defining the problem or question that needs to be answered. Ensure it's a task suitable for self-consistency prompting, such as a complex reasoning or arithmetic problem.

Prepare Chain-of-Thought Prompting: Start by prompting the language model in a chain-of-thought (CoT) manner. This involves asking the model to generate a series of short sentences that mimic human reasoning. For example, for a math problem, instead of directly seeking the answer, prompt the model to explain each step of the calculation.

Example: “If I have 3 apples and get 2 more, how many do I have? Let's think step by step...”

Generate Diverse Reasoning Paths: Instead of using only the model's first, 'greedy' response, sample multiple reasoning paths for the same problem. In practice, this means requesting several completions of the same CoT prompt with sampling enabled, so that each completion can follow a different line of reasoning.

Sampling Methods: Use techniques like temperature sampling, top-k sampling, or nucleus sampling. Adjust these settings to ensure a variety of answers are generated.

Aggregate and Choose the Most Consistent Answer: Once you have a set of different reasoning paths and their corresponding answers, it's time to find the most consistent answer. This typically involves looking for the answer that appears most frequently or is common across the different paths.

Majority Rule: The answer that appears most frequently across your sampled responses is the most consistent one and the most likely to be correct, although a majority is not a guarantee of correctness.

Interpret Results: Understand that while this method improves accuracy, it might sometimes generate incorrect or nonsensical reasoning paths. Be prepared to evaluate the results critically.

Manage Computational Resources: Remember that generating multiple reasoning paths requires more computational power. Start with a smaller number of samples to balance accuracy with resource usage.

Apply in Suitable Contexts: This method is particularly useful for tasks that require deeper reasoning rather than simple fact recall. Use it in contexts where multiple paths can lead to a correct answer.
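The implementation steps above can be condensed into a short loop: sample n reasoning paths, parse each final answer, and take a majority vote. This is a sketch under stated assumptions. `sample` is a hypothetical callable that, in a real setup, would wrap one temperature- or nucleus-sampled call to your model and parse its answer. The toy sampler below is also hypothetical: it uses Python's `random` module to simulate a model that is right most of the time, so the code runs offline.

```python
import random
from collections import Counter
from typing import Callable, Optional, Tuple

# Hypothetical interface: one call returns (reasoning_text, parsed_final_answer)
# for a single sampled reasoning path; the answer is None if it could not be parsed.
Sampler = Callable[[str], Tuple[str, Optional[str]]]


def self_consistency(prompt: str, sample: Sampler, n: int = 10) -> Tuple[Optional[str], float]:
    """Sample n reasoning paths for one prompt and majority-vote their final answers.

    Returns the winning answer and the fraction of all n paths that reached it.
    """
    answers = [answer for _, answer in (sample(prompt) for _ in range(n))]
    votes = Counter(a for a in answers if a is not None)
    if not votes:
        return None, 0.0
    winner, count = votes.most_common(1)[0]
    return winner, count / n


def make_toy_sampler(seed: int = 0) -> Sampler:
    """Offline stand-in for sampling from a real model (hypothetical).

    It returns the right answer "5" about 70% of the time and an
    off-by-one slip otherwise, so different calls disagree like real samples.
    """
    rng = random.Random(seed)

    def sample(prompt: str) -> Tuple[str, Optional[str]]:
        answer = "5" if rng.random() < 0.7 else rng.choice(["4", "6"])
        return f"3 apples plus 2 apples... The answer is {answer}.", answer

    return sample


if __name__ == "__main__":
    prompt = "I start with 3 apples and buy 2 more. How many apples? Let's think step by step."
    answer, share = self_consistency(prompt, make_toy_sampler(seed=42), n=10)
    print(f"Majority answer: {answer} (agreement {share:.0%})")
```

The default `n=10` matches the advice to start with a small number of samples. Returning the agreement share alongside the answer is a design choice of this sketch: a low share is a cheap signal that the problem may need more samples or a closer human look.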

Example Prompt

Scenario (arithmetic problem): "Imagine you have three apples, and you buy two more. How many apples do you have now?"

Step-by-Step Self-Consistency Prompting Implementation:

Understand the Task:

Task: Calculate the total number of apples after buying 2 more when you already have 3.

Suitable for self-consistency due to its arithmetic nature.

Prepare Chain-of-Thought Prompting:

CoT Prompt: "I start with 3 apples. Then, I buy 2 more apples. To find the total, I need to add these quantities. Let's do this step by step..."

Generate Diverse Reasoning Paths:

Use sampling methods to generate various solutions to the problem.

Example Sampled Responses:

"Starting with 3 apples, adding 2 more means 3 + 2. That gives a total of 5 apples."

"First, there are 3 apples. Buying 2 more, which is an addition operation. So, 3 apples plus 2 apples equals 5 apples in total."

"You have 3 apples initially. When you buy 2 additional apples, you add these 2 to the existing 3, making it 5 apples."

Aggregate and Choose the Most Consistent Answer:

Review the answers and find the common result.

In this case, all paths indicate that the total number of apples is 5.

Interpret Results:

The fact that different reasoning paths all arrive at "5 apples" increases our confidence that this answer is correct.

Manage Computational Resources:

If computational resources are a concern, limit the number of sampled reasoning paths.

Start with 5-10 samples to balance the trade-off between accuracy and resource usage.

Apply in Suitable Contexts:

This method is ideal for the given arithmetic problem as it involves logical reasoning where multiple paths can lead to the correct answer.

Outcome: After implementing self-consistency prompting, we find that the most consistent answer to the question "How many apples do you have after buying 2 more when you already have 3?" is 5 apples. This approach has allowed us to explore different reasoning paths and select the most reliable answer based on consistency.
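To make the aggregation step concrete, the three sampled responses from this walkthrough can be scored in a few lines of Python. The "last number in a response is its final answer" rule is an illustrative assumption that happens to work for these three responses; with a real model, it is more reliable to have the exemplars end with a fixed phrase such as "The answer is N." and parse that.

```python
import re
from collections import Counter
from typing import Optional

# The three sampled responses from the walkthrough above.
responses = [
    "Starting with 3 apples, adding 2 more means 3 + 2. That gives a total of 5 apples.",
    "First, there are 3 apples. Buying 2 more, which is an addition operation. "
    "So, 3 apples plus 2 apples equals 5 apples in total.",
    "You have 3 apples initially. When you buy 2 additional apples, you add these "
    "2 to the existing 3, making it 5 apples.",
]


def final_number(text: str) -> Optional[str]:
    # Assumption: the last number in a response is its final answer.
    nums = re.findall(r"\d+", text)
    return nums[-1] if nums else None


votes = Counter(a for a in map(final_number, responses) if a is not None)
answer, count = votes.most_common(1)[0]
print(f"Majority answer: {answer} ({count}/{len(responses)} paths)")
# prints: Majority answer: 5 (3/3 paths)
```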

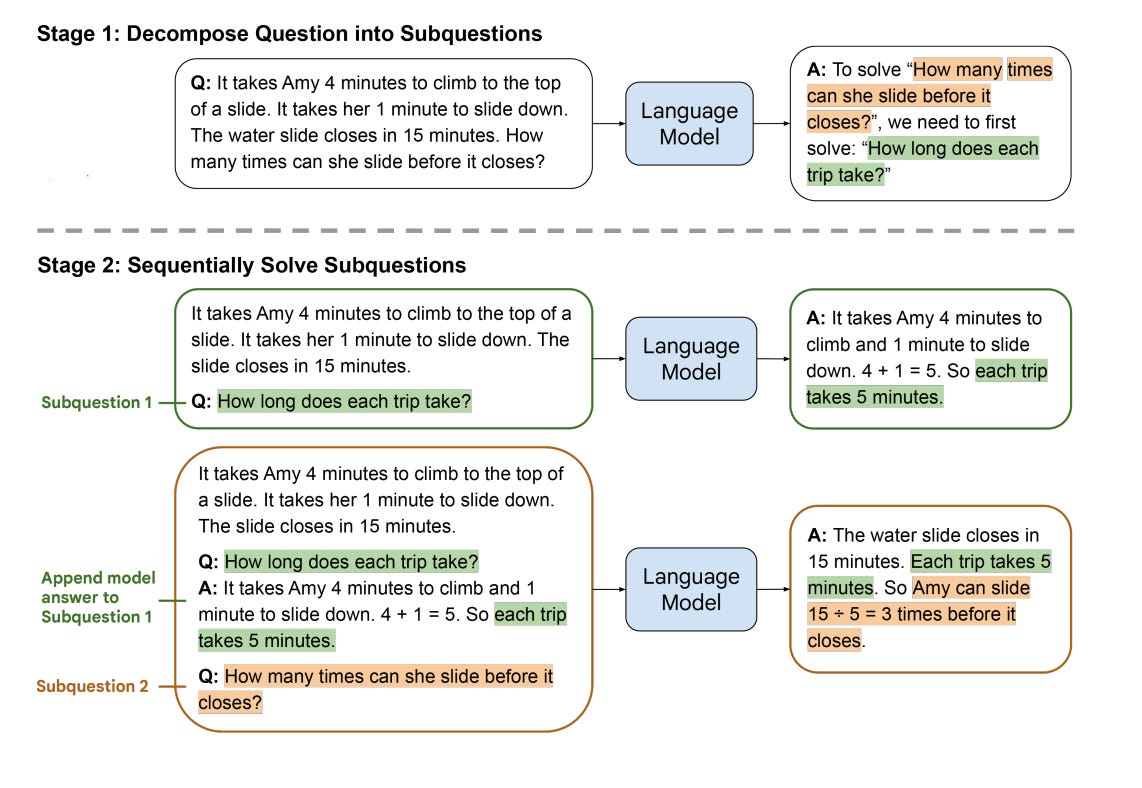

Least-to-Most Prompting:

The "Least-to-Most Prompting" strategy is a structured approach for Large Language Models (LLMs). It breaks a complex problem into a sequence of simpler subproblems and solves them in order, with each solution building on the answers to the earlier ones, so the model can handle increasingly complex tasks by starting from simpler, foundational steps.

Disclaimer:

Please remember that this cookbook is based on research and personal experience. I am not an expert in prompt engineering. Also, keep in mind that the field of prompt engineering is rapidly evolving, so make sure to conduct your own research to keep up with emerging techniques.